When it comes to vulnerability management, you can get the first 20% up and running without many issues: deploying a management panel, adding sensors and running discovery scans. Most of these are fairly intuitive tasks, and some platforms have wizard-like interfaces that can get you to the 20% mark within a few hours. However, to get the most out of your VMS (Vulnerability Management System), you’ll need to work both within and outside the security team, with a mix of stakeholders. That collaboration is what makes your efforts worthwhile and ensures that detected vulnerabilities are handled quickly and efficiently.

Whatever your ticketing and task management platform, it’s important that vulnerability management solutions do not operate in a vacuum. It isn’t necessarily realistic, or scalable, for your VMS operator to also be the person who fixes or patches the vulnerable system, or who is responsible for identifying who the vulnerable system belongs to. In large enterprises, and even in small ones, identifying system owners can be challenging. Who owns the operating system? Are they the ones responsible for patching firmware? Who owns the web server? Are they the ones who own the application?

Each system can have varying levels of complexity, as well as multiple owners responsible for different aspects of it. Documenting who owns each system is important, but scans should also be set up so that scanned assets carry identifying metadata that references the owners of the system or platform. A good practice is to include this information in a summary or description field of the scan so it’s handy for automation.

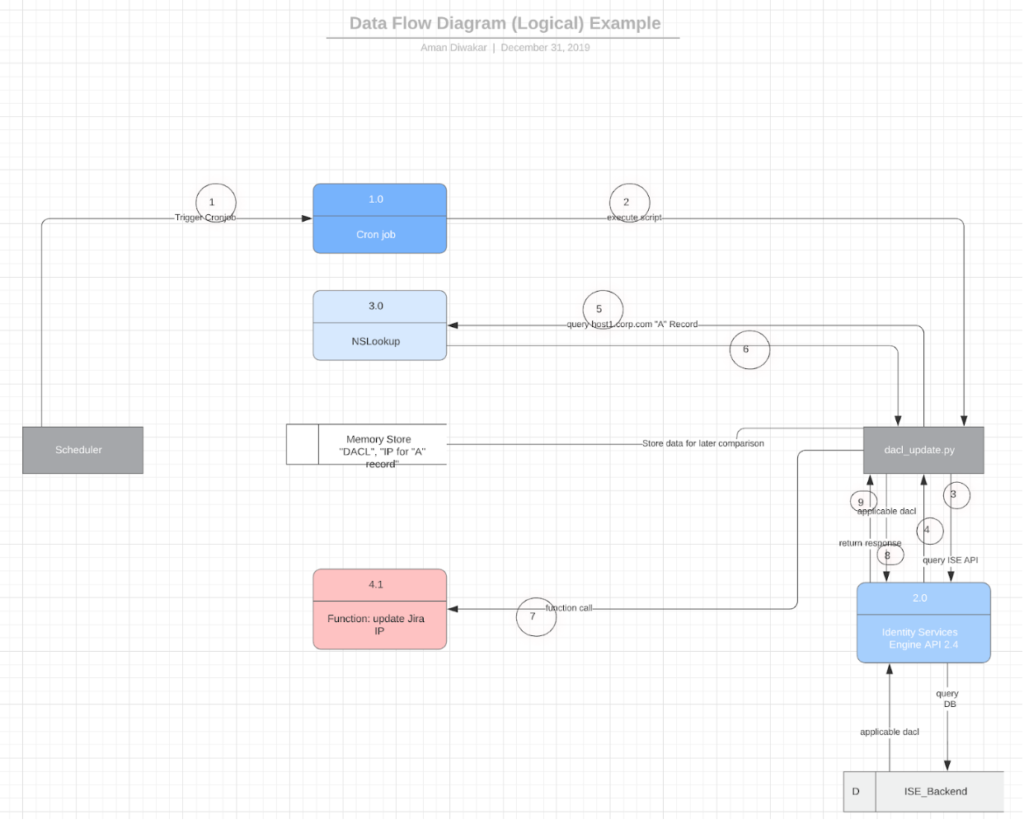
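If owner details live in the scan’s description field, a small parser is often all the downstream automation needs. The sketch below is a minimal example, assuming a hypothetical `key=value; key=value` convention; the `owner`, `os_owner` and `env` keys are illustrative and not a feature of any particular VMS. Free-text fragments without an `=` are skipped, so a human-readable note can share the field with the metadata.

```python
def parse_scan_metadata(description: str) -> dict[str, str]:
    """Parse 'key=value; key=value' pairs from a scan description field.

    The 'key=value; ...' format is a hypothetical convention; adapt it to
    whatever format your team agrees on.
    """
    metadata: dict[str, str] = {}
    for pair in description.split(";"):
        if "=" not in pair:
            continue  # skip free-text fragments that are not key=value pairs
        key, value = pair.split("=", 1)  # split once so values may contain '='
        key, value = key.strip().lower(), value.strip()
        if key and value:
            metadata[key] = value
    return metadata


if __name__ == "__main__":
    desc = "Weekly prod scan; owner=web-platform; os_owner=infra-linux; env=prod"
    # prints {'owner': 'web-platform', 'os_owner': 'infra-linux', 'env': 'prod'}
    print(parse_scan_metadata(desc))
```

Keeping separate keys such as `owner` and `os_owner` is one way to reflect the split ownership described above, where the OS and the application on the same host may belong to different teams.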

Once ample metadata is provided, automation can create tickets for high-severity vulnerabilities and assign them to the appropriate owner. This ensures that they are handled in a timely manner and don’t get stuck within the confines of the VMS itself. Whether the ticketing system is Zendesk, ServiceNow or Jira, each platform can be driven through scripts and its API to create and assign tickets with the appropriate severity rating.
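As a minimal sketch of that automation, the Python below keeps only high and critical findings (CVSS v3 base score of 7.0 or more) and builds create-issue payloads in the shape Jira’s REST API v2 accepts. The `Finding` class, the owner-to-project mapping, the `SEC` fallback project and the `Bug` issue type are hypothetical placeholders. The code only builds the payloads; sending them (for example as an authenticated POST to `/rest/api/2/issue`) is left out so the example runs offline, and Zendesk or ServiceNow would need their own payload formats.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    host: str
    title: str
    cvss: float  # CVSS v3 base score, 0.0-10.0
    owner: str   # taken from the scan metadata (hypothetical 'owner' key)


# Hypothetical mapping from owner metadata to a Jira project key.
OWNER_TO_PROJECT = {"web-platform": "WEB", "infra-linux": "INFRA"}
DEFAULT_PROJECT = "SEC"  # fallback queue when an owner has no mapping
HIGH_SEVERITY = 7.0      # CVSS v3: 7.0-8.9 is High, 9.0-10.0 is Critical


def jira_priority(cvss: float) -> str:
    """Map a CVSS score onto Jira's default priority names."""
    return "Highest" if cvss >= 9.0 else "High"


def build_ticket(finding: Finding) -> dict:
    """Build a Jira REST API v2 create-issue payload for one finding."""
    project = OWNER_TO_PROJECT.get(finding.owner, DEFAULT_PROJECT)
    return {
        "fields": {
            "project": {"key": project},
            "issuetype": {"name": "Bug"},  # hypothetical; use your project's issue type
            "priority": {"name": jira_priority(finding.cvss)},
            "summary": f"[VMS] {finding.title} on {finding.host}",
            "description": f"CVSS {finding.cvss} finding on {finding.host}.",
            "labels": ["vulnerability", f"owner-{finding.owner}"],
        }
    }


def tickets_for(findings: list[Finding]) -> list[dict]:
    """Only high and critical findings become tickets; the rest stay in the VMS."""
    return [build_ticket(f) for f in findings if f.cvss >= HIGH_SEVERITY]


if __name__ == "__main__":
    findings = [
        Finding("web01", "Outdated OpenSSL", 9.8, "web-platform"),
        Finding("db01", "Weak TLS cipher", 5.3, "infra-linux"),
    ]
    for ticket in tickets_for(findings):
        print(ticket["fields"]["project"]["key"], ticket["fields"]["summary"])
```

Routing unmapped owners to a fallback project, rather than dropping them, keeps high-severity findings visible even when asset metadata is incomplete.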

Of course, to get the highest-fidelity scans possible, the scanning engine needs accurate information about the system being scanned. VMS platforms should be configured with certificate-based or other forms of authentication so they can log into the OS or application and accurately assess it for vulnerabilities. Unauthenticated scans only see what a system exposes over the network, which makes them useful for asset management but of limited value for vulnerability management. Authenticated scans improve the signal-to-noise ratio, making it easier to identify critical vulnerabilities and assign the appropriate SLA.

Ensure that your VMS has only the minimum privileges required to perform its scans. On Linux and Unix systems, this can be accomplished with a sudoers file that limits the scan account to the commands it needs, or by otherwise setting appropriate privilege levels for those commands. LDAP and HashiCorp Vault are good examples of centralized systems for managing these credentials. It is a common and recommended practice to rotate the credentials on a set interval and to log all activities performed by the scanning account. Rotation limits how long a leaked credential stays useful, and logging allows unintended actions to be detected and stopped quickly.
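Rotating on a set interval is easier to enforce when something checks for overdue credentials. The sketch below assumes you can export each scan credential’s last rotation time from wherever it is stored; the account names and the 30-day `ROTATION_INTERVAL` are hypothetical, and fetching the timestamps from LDAP or Vault is deliberately out of scope.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical rotation policy: scan credentials older than this are overdue.
ROTATION_INTERVAL = timedelta(days=30)


def overdue_credentials(last_rotated: dict[str, datetime],
                        now: datetime,
                        interval: timedelta = ROTATION_INTERVAL) -> list[str]:
    """Return names of credentials last rotated more than `interval` before `now`."""
    return sorted(name for name, rotated_at in last_rotated.items()
                  if now - rotated_at > interval)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    inventory = {
        "svc-vms-linux": now - timedelta(days=45),    # hypothetical account, overdue
        "svc-vms-windows": now - timedelta(days=10),  # hypothetical account, within policy
    }
    for name in overdue_credentials(inventory, now):
        print(f"rotate {name}")
```

Passing `now` in explicitly, instead of reading the clock inside the function, keeps the check deterministic and easy to test.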

Last but not least, establish realistic SLAs and mean time to remediation (MTTR) targets, track these metrics, and give the power back to system owners to incorporate their own patching strategies for their respective systems. Once a remediation task has been performed, the patching process should automatically alert the scan engineer, and the ticketing platform should be able to kick off another scan through the VMS API to validate the fix.
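Tracking these metrics can start small. The sketch below computes MTTR and SLA compliance from remediated findings, measuring each one from first detection to the validation rescan that confirmed the fix. The per-severity SLA targets and the `RemediatedFinding` record are hypothetical; the actual targets should be agreed with the system owners.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean

# Hypothetical SLA targets per severity; agree on these with system owners.
SLA = {
    "critical": timedelta(days=7),
    "high": timedelta(days=30),
    "medium": timedelta(days=90),
}


@dataclass
class RemediatedFinding:
    severity: str             # one of the SLA keys above
    detected: datetime        # first seen by the VMS
    verified_fixed: datetime  # validation rescan confirmed the fix


def mttr_days(findings: list[RemediatedFinding]) -> float:
    """Mean time to remediation in days, from detection to verified fix."""
    if not findings:
        raise ValueError("no remediated findings")
    return mean((f.verified_fixed - f.detected).total_seconds() / 86400
                for f in findings)


def sla_compliance(findings: list[RemediatedFinding]) -> float:
    """Fraction of findings remediated within the SLA for their severity."""
    if not findings:
        raise ValueError("no remediated findings")
    met = sum(1 for f in findings
              if f.verified_fixed - f.detected <= SLA[f.severity])
    return met / len(findings)


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1)
    done = [
        RemediatedFinding("critical", t0, t0 + timedelta(days=3)),
        RemediatedFinding("high", t0, t0 + timedelta(days=45)),
    ]
    print(f"MTTR: {mttr_days(done):.1f} days")            # MTTR: 24.0 days
    print(f"SLA compliance: {sla_compliance(done):.0%}")  # SLA compliance: 50%
```

Stopping the clock at the verified fix rather than at ticket closure means the metric only counts remediations the VMS has actually confirmed.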